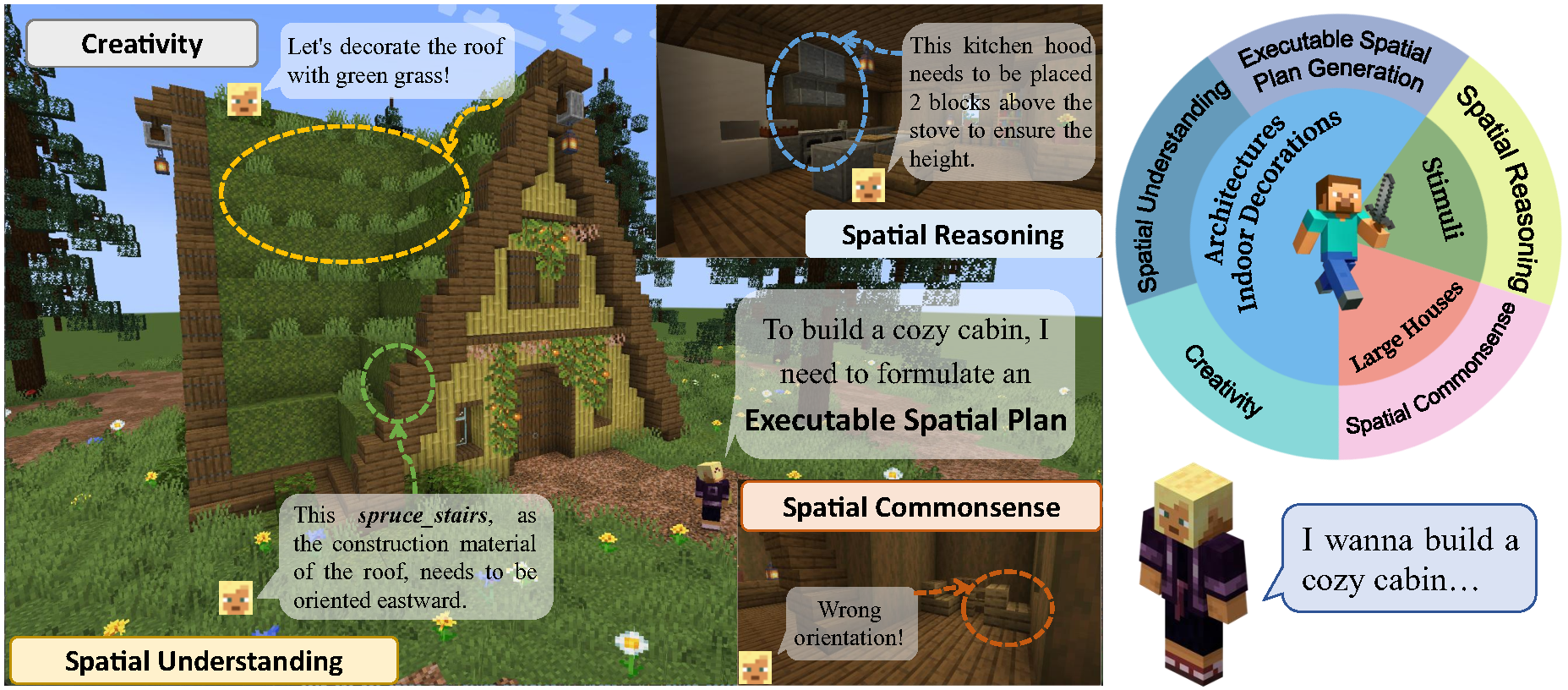

Spatial planning is a crucial part of spatial intelligence; it requires understanding and planning object arrangements from a spatial perspective. AI agents with spatial planning ability can better adapt to real-world applications such as robotic manipulation, automatic assembly, and urban planning. Recent works have attempted to construct benchmarks for evaluating the spatial intelligence of Multimodal Large Language Models (MLLMs). Nevertheless, these benchmarks primarily focus on spatial reasoning in typical Visual Question-Answering (VQA) form, which leaves a gap between abstract spatial understanding and concrete task execution. In this work, we take a step further and build a comprehensive benchmark called MineAnyBuild, which aims to evaluate the spatial planning ability of open-world AI agents in the Minecraft game. Specifically, MineAnyBuild requires an agent to generate executable architecture building plans based on given multi-modal human instructions. It involves 4,000 curated spatial planning tasks and also provides a paradigm for infinitely expandable data collection by utilizing rich player-generated content. MineAnyBuild evaluates spatial planning through four core supporting dimensions: spatial understanding, spatial reasoning, creativity, and spatial commonsense. Based on MineAnyBuild, we perform a comprehensive evaluation of existing MLLM-based agents, revealing severe limitations but enormous potential in their spatial planning abilities. We believe MineAnyBuild will open new avenues for the evaluation of spatial intelligence and help promote further development of open-world AI agents capable of spatial planning.

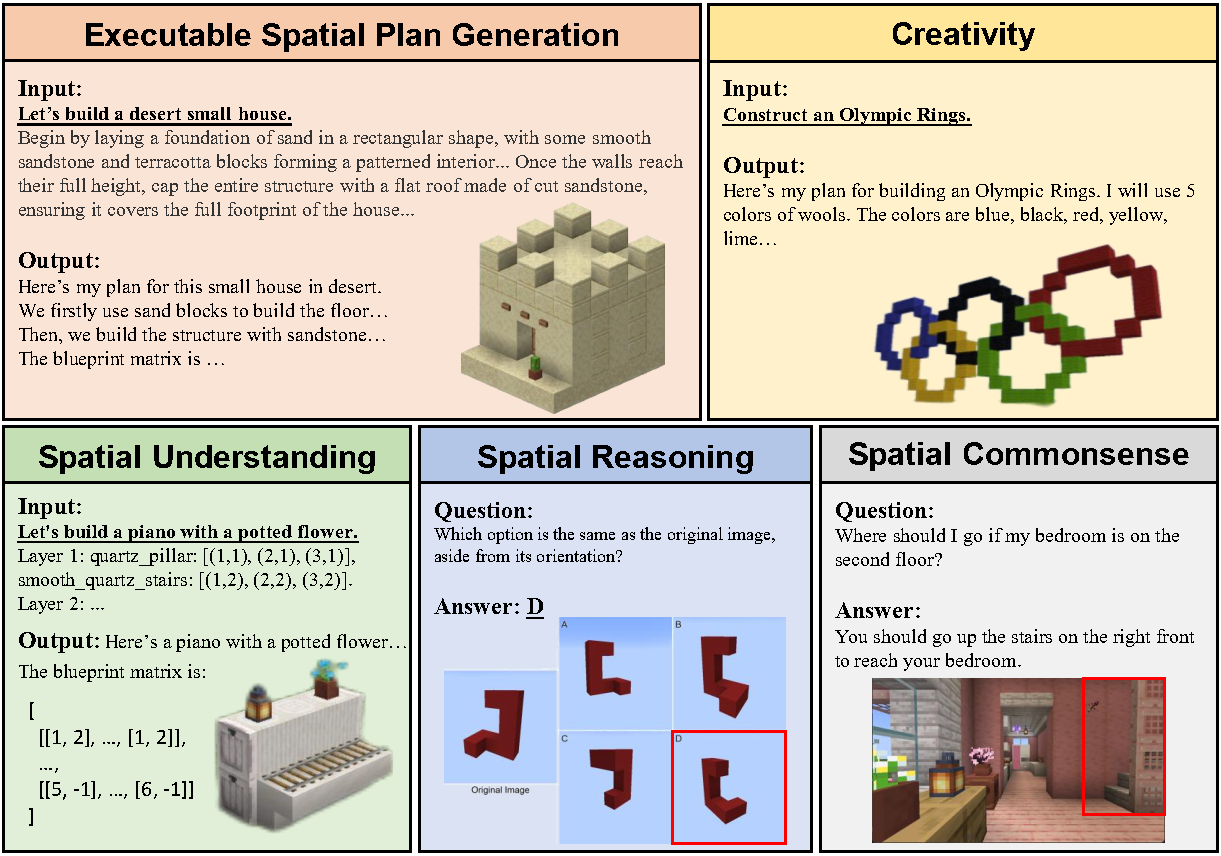

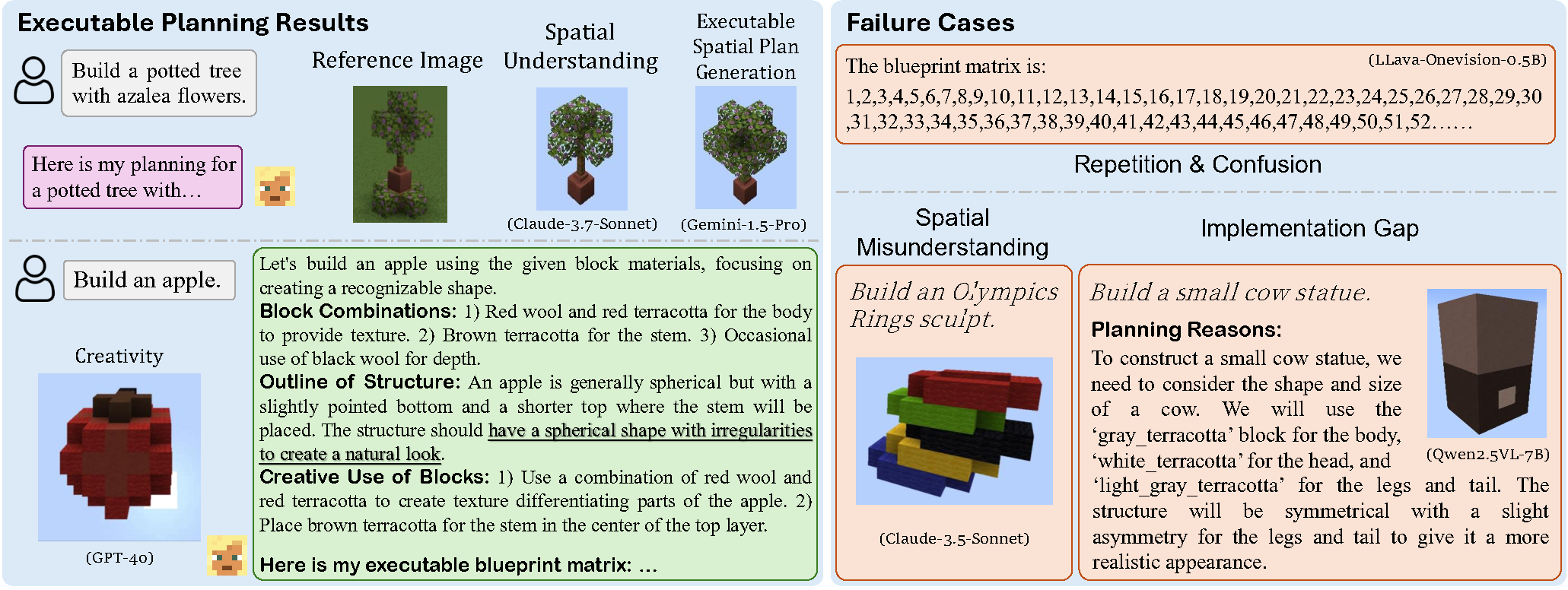

MineAnyBuild involves approximately 4,000 spatial planning tasks with 500+ building and indoor decoration assets. These tasks, which comprise Executable Spatial Plan Generation, Spatial Understanding, Creativity, Spatial Reasoning, and Spatial Commonsense, correspond to diverse evaluation dimensions and together enable a comprehensive assessment of AI agents’ spatial planning capabilities. In the Executable Spatial Plan Generation, Spatial Understanding, and Creativity tasks, the agent needs to generate an executable spatial plan for building an architecture according to the given instruction. In the Spatial Reasoning and Spatial Commonsense tasks, by contrast, we introduce ~2,000 VQA pairs, where we ask the agent to answer the given questions accompanied by the related images.
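To make the notion of an "executable spatial plan" more concrete, the following is a minimal sketch of one way such a plan could be represented and checked. The format is an assumption for illustration only: the 3D grid layout `plan[y][z][x]`, the `ALLOWED_BLOCKS` palette, and the helper names `is_executable` and `count_blocks` are all hypothetical and are not taken from the benchmark.

```python
from typing import List

# Hypothetical plan format (an assumption, not the benchmark's actual schema):
# plan[y][z][x] holds a block name, and "air" marks an empty cell.
Plan = List[List[List[str]]]

# Illustrative palette only; the real benchmark uses its own block vocabulary.
ALLOWED_BLOCKS = {"air", "oak_planks", "stone", "glass"}


def is_executable(plan: Plan) -> bool:
    """Return True if the plan is a non-empty rectangular 3D grid of allowed blocks."""
    if not isinstance(plan, list) or not plan:
        return False
    first_layer = plan[0]
    if not isinstance(first_layer, list) or not first_layer:
        return False
    if not isinstance(first_layer[0], list) or not first_layer[0]:
        return False
    depth, width = len(first_layer), len(first_layer[0])
    for layer in plan:
        if not isinstance(layer, list) or len(layer) != depth:
            return False
        for row in layer:
            if not isinstance(row, list) or len(row) != width:
                return False
            for block in row:
                if not isinstance(block, str) or block not in ALLOWED_BLOCKS:
                    return False
    return True


def count_blocks(plan: Plan) -> int:
    """Count the non-air blocks in a plan that has already been validated."""
    return sum(block != "air" for layer in plan for row in layer for block in row)


# A toy 2x2x2 structure: a stone floor with a partial second layer.
hut: Plan = [
    [["stone", "stone"], ["stone", "stone"]],
    [["oak_planks", "glass"], ["oak_planks", "air"]],
]

if __name__ == "__main__":
    print(is_executable(hut), count_blocks(hut))
```

A check of this kind separates malformed outputs (ragged grids, unknown block names) from plans that can at least be placed in the game, before any judgment about quality is made.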

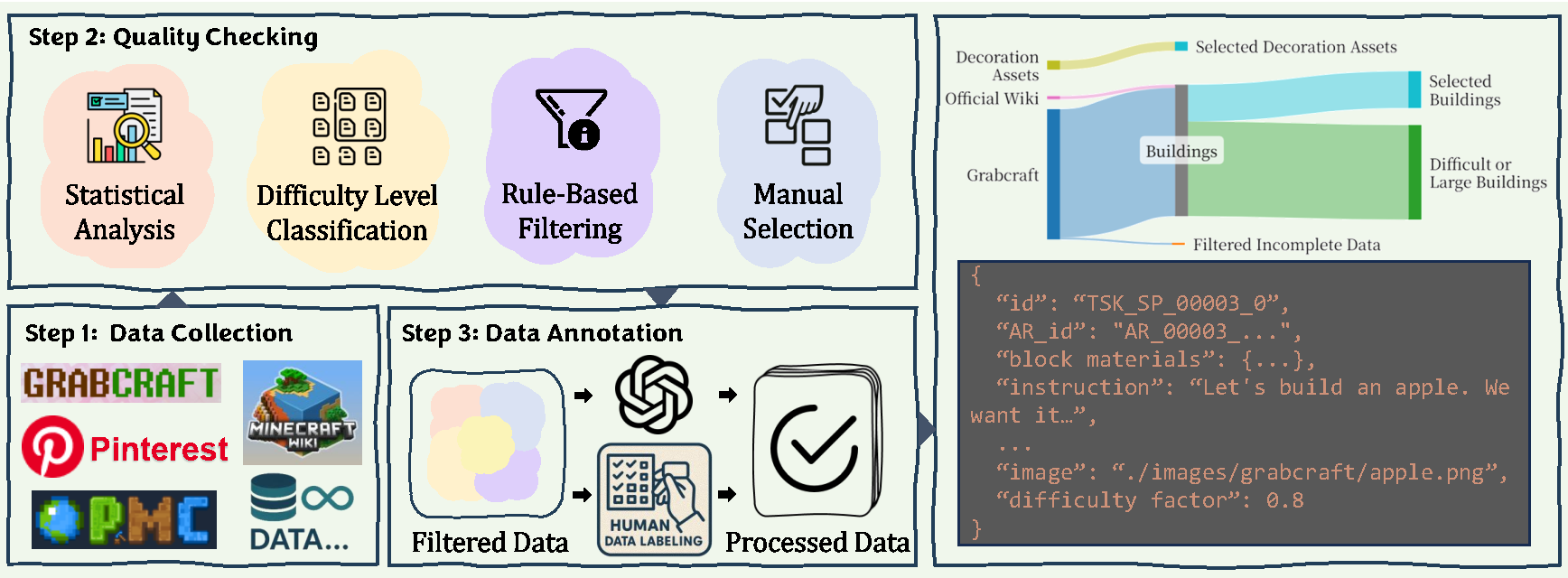

Data curation pipeline of MineAnyBuild. We curate our datasets in three core steps: data collection, quality checking, and data annotation. The right side presents a Sankey diagram of our data processing flow, along with an example of the simplified format of the processed data.

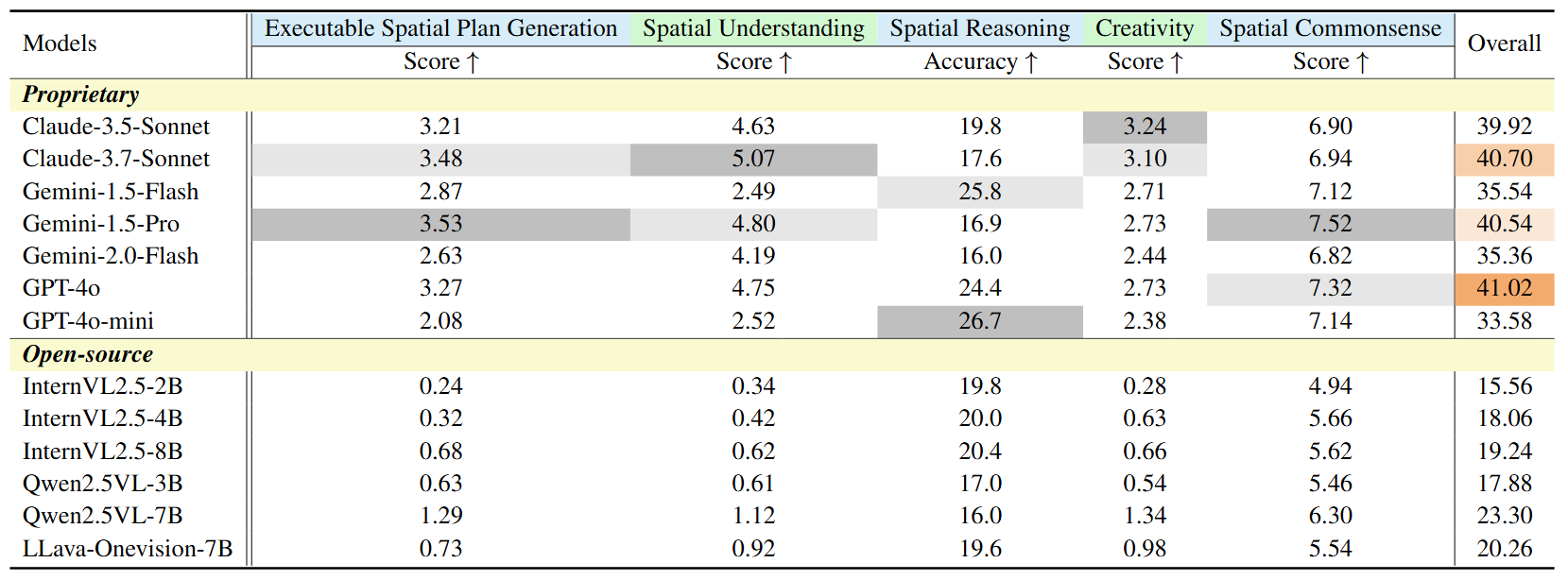

Evaluation results of AI agents on MineAnyBuild. Gray indicates the best performance for each evaluation dimension among all agents, and Light Gray indicates the second-best result. We also highlight the top three agents by overall performance with Dark Orange, Orange, and Light Orange, respectively.

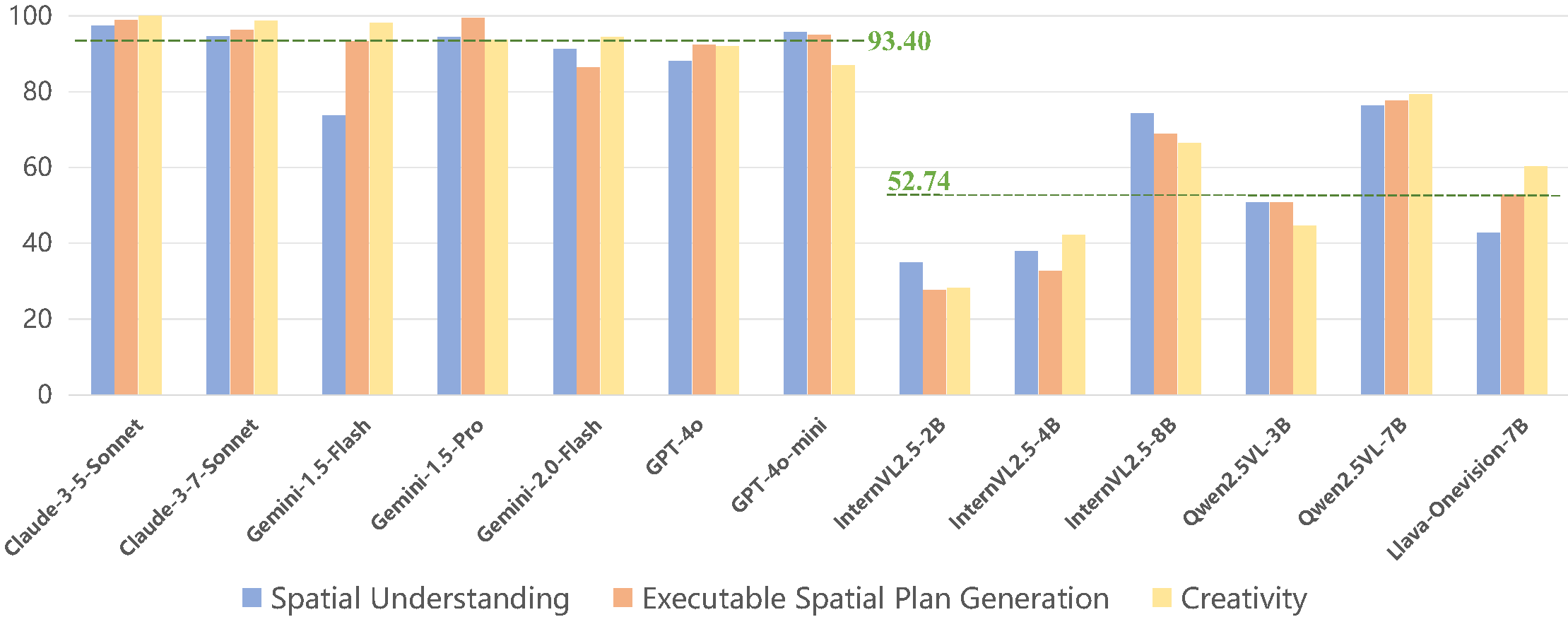

Bar chart of Output Success Rate (OSR) for MLLMs. Two green dotted lines indicate the average OSR of proprietary models and open-source models, respectively.
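As a rough illustration of how the two average lines in such a chart could be derived, here is a minimal sketch. It assumes that OSR is the fraction of a model's responses that yield a usable output; that definition, the function names, and the model names and outcomes are all placeholders for illustration and are not results from the benchmark.

```python
from statistics import mean
from typing import Dict, List


def output_success_rate(outcomes: List[bool]) -> float:
    """Fraction of responses that produced a usable output (assumed OSR definition)."""
    if not outcomes:
        raise ValueError("need at least one outcome")
    return sum(outcomes) / len(outcomes)


def group_average(osr_by_model: Dict[str, float], groups: Dict[str, str]) -> Dict[str, float]:
    """Average the per-model OSR within each group, e.g. proprietary vs. open-source."""
    buckets: Dict[str, List[float]] = {}
    for model, osr in osr_by_model.items():
        buckets.setdefault(groups[model], []).append(osr)
    return {group: mean(values) for group, values in buckets.items()}


# Placeholder outcomes for three hypothetical models (made-up data, not real results).
outcomes_by_model = {
    "model_a": [True, True, False, True],
    "model_b": [True, False],
    "model_c": [True, True],
}
groups = {"model_a": "proprietary", "model_b": "open-source", "model_c": "open-source"}

osr_by_model = {name: output_success_rate(o) for name, o in outcomes_by_model.items()}
averages = group_average(osr_by_model, groups)

if __name__ == "__main__":
    print(osr_by_model)
    print(averages)
```

Averaging per-model rates, as done here, weights every model equally regardless of how many responses it produced; pooling all responses within a group would be an alternative design.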

@inproceedings{wei2025mineanybuild,
  title={MineAnyBuild: Benchmarking Spatial Planning for Open-world {AI} Agents},
  author={Ziming Wei and Bingqian Lin and Zijian Jiao and Yunshuang Nie and Liang Ma and Yuecheng Liu and Yuzheng Zhuang and Xiaodan Liang},
  booktitle={The Thirty-ninth Annual Conference on Neural Information Processing Systems Datasets and Benchmarks Track},
  year={2025}
}